LeapScan - Leap Motion 3D Scanning

I bought a Leap Motion years ago and, despite a few hack day projects here and there, it mostly just gathers dust in one of my cupboards.

During lunch with a friend we got talking about 3D scanning tools, and I figured I’d see if the Leap could do it. Aside from a 1st generation Kinect, I don’t have anything else with decent stereo cameras.

A brief search online didn’t turn up much, and definitely no existing projects or decent code for doing generic 3D scanning with the Leap Motion, so I thought I’d have a quick attempt over a weekend.

The attempt was mostly a failure, but I thought I’d write a short blog post detailing why I thought it might work and how I went about creating a small proof of concept.

The Leap Motion is a small USB device with two infrared cameras that can measure finger and hand movement very accurately (reportedly down to 0.7mm). To do that it uses stereoscopic images from the cameras and some proprietary code that, according to Leap Motion, does “complex maths” to calculate the positions of hands and fingers above the sensor.

For scanning regular objects in 3D, the most common “cheap”, non-contact methods typically involve casting a point, line or pattern onto the object in front of a single camera. How the projected shape deforms reveals which surfaces it has landed on and how far they are from the camera, which gives you a depth map. If the camera or the object can move, those depth maps can be stitched together into a point cloud.

Another method, though less reliable, is to use two cameras a set distance apart and compare the two images: the nearer an object is, the more it shifts between the two views. This is the same principle our eyes use for depth perception.
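To make the two-camera idea concrete, here is a minimal sketch of the standard relationship between that shift (the disparity) and depth for two parallel, rectified cameras: depth = focal length × baseline / disparity. The function name and the numbers in the usage note are illustrative assumptions, not values taken from the Leap Motion or from LeapScan.

```cpp
#include <limits>

// Depth of a point seen by two parallel, rectified cameras.
//   focalLengthPx : focal length expressed in pixels (assumed known)
//   baselineMm    : distance between the two camera centres, in mm
//   disparityPx   : horizontal shift of the point between the two images
// Returns the depth in mm, or infinity when there is no measurable shift.
double depthFromDisparity(double focalLengthPx, double baselineMm, double disparityPx) {
    if (disparityPx <= 0.0) {
        // Zero disparity means the point is effectively infinitely far away.
        return std::numeric_limits<double>::infinity();
    }
    return focalLengthPx * baselineMm / disparityPx;
}
```

With a hypothetical focal length of 100 pixels and a 40mm baseline, a 20 pixel shift gives `depthFromDisparity(100.0, 40.0, 20.0)`, which is 200mm. Because depth is inversely proportional to disparity, a one pixel matching error matters far more for distant points than for near ones.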

The Leap Motion, with its two cameras a fixed distance apart, seemed like ideal hardware to try this with. At the time of writing Leap had only recently added raw camera images to their API (previously it only returned finger and hand positions), and thankfully the images were exposed in the C++ API. I chose C++ because for anything image- and maths-heavy I’m probably going to use OpenCV as well, and I could do with all the performance I could get.

The images the Leap returns are, by default, fairly distorted due to the lenses on the cameras. Thankfully the API also exposes the “calibration map” which you can use to fix the distortion.
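As a rough illustration of how a lookup map like this can be applied, the sketch below builds a corrected image by asking, for every output pixel, where in the distorted image it should be sampled from. The layout is an assumption made for the example: one normalised (x, y) pair in the range 0 to 1 per output pixel, with anything outside that range meaning "no valid source". The `GrayImage` type and `undistort` function are hypothetical and are not part of the Leap SDK; a coarser map would first need to be interpolated up to image size.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical 8-bit greyscale image, stored row by row.
struct GrayImage {
    int width;
    int height;
    std::vector<std::uint8_t> pixels;
};

// map holds two floats per output pixel: the normalised (x, y) position
// in the distorted source image to sample from (assumed layout).
GrayImage undistort(const GrayImage& src, const std::vector<float>& map) {
    const std::size_t count = static_cast<std::size_t>(src.width) * src.height;
    assert(src.pixels.size() == count);
    assert(map.size() == count * 2);

    GrayImage dst{src.width, src.height, std::vector<std::uint8_t>(count, 0)};
    auto at = [&](int x, int y) {
        return static_cast<float>(src.pixels[static_cast<std::size_t>(y) * src.width + x]);
    };

    for (int y = 0; y < src.height; ++y) {
        for (int x = 0; x < src.width; ++x) {
            const std::size_t i = static_cast<std::size_t>(y) * src.width + x;
            const float u = map[i * 2];
            const float v = map[i * 2 + 1];
            if (u < 0.0f || u > 1.0f || v < 0.0f || v > 1.0f) {
                continue;  // no valid source for this pixel, leave it black
            }
            // Convert to source pixel coordinates and blend the 4 neighbours.
            const float sx = u * (src.width - 1);
            const float sy = v * (src.height - 1);
            const int x0 = static_cast<int>(std::floor(sx));
            const int y0 = static_cast<int>(std::floor(sy));
            const int x1 = std::min(x0 + 1, src.width - 1);
            const int y1 = std::min(y0 + 1, src.height - 1);
            const float fx = sx - x0;
            const float fy = sy - y0;
            const float top = at(x0, y0) * (1.0f - fx) + at(x1, y0) * fx;
            const float bottom = at(x0, y1) * (1.0f - fx) + at(x1, y1) * fx;
            dst.pixels[i] = static_cast<std::uint8_t>(std::lround(top * (1.0f - fy) + bottom * fy));
        }
    }
    return dst;
}
```

Working backwards from output to source like this guarantees every output pixel is filled at most once and avoids the holes you get when pushing source pixels forwards.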

As someone who was very much a C grade maths student I’m usually more than happy to utilise existing libraries to handle some of the heavy lifting. When it comes to 3D scene reconstruction using stereoscopic images there’s a lot of maths required. Thankfully for me OpenCV already has some functions for this.
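One family of stereo algorithms is block matching, which OpenCV offers through matchers such as `cv::StereoBM`. The sketch below is a plain C++ illustration of the core idea on a single image row, not OpenCV's implementation and not necessarily what LeapScan uses: for each pixel in the left image, slide a small window along the right image and keep the shift with the lowest sum of absolute differences (SAD). The function name and parameters are assumptions made for the example.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>
#include <limits>
#include <vector>

// Returns one disparity per pixel of the left scanline, or -1 where the
// window does not fit. Assumes both rows come from rectified images, so a
// point at left[x] appears at right[x - d] for some shift d >= 0.
std::vector<int> matchScanline(const std::vector<std::uint8_t>& left,
                               const std::vector<std::uint8_t>& right,
                               int halfWindow, int maxDisparity) {
    assert(left.size() == right.size());
    const int n = static_cast<int>(left.size());
    std::vector<int> disparity(left.size(), -1);

    for (int x = halfWindow; x < n - halfWindow; ++x) {
        long bestCost = std::numeric_limits<long>::max();
        // Try every shift that keeps the right-hand window inside the row.
        for (int d = 0; d <= maxDisparity && x - d - halfWindow >= 0; ++d) {
            long cost = 0;
            for (int k = -halfWindow; k <= halfWindow; ++k) {
                cost += std::abs(static_cast<int>(left[x + k]) -
                                 static_cast<int>(right[x - d + k]));
            }
            if (cost < bestCost) {  // ties keep the smallest shift
                bestCost = cost;
                disparity[x] = d;
            }
        }
    }
    return disparity;
}
```

Window size and maximum disparity are the kind of parameters that need tuning. Note also that a flat, textureless region costs the same at every shift, so its result is arbitrary, which is one general reason stereo matching is less reliable than projecting a pattern.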

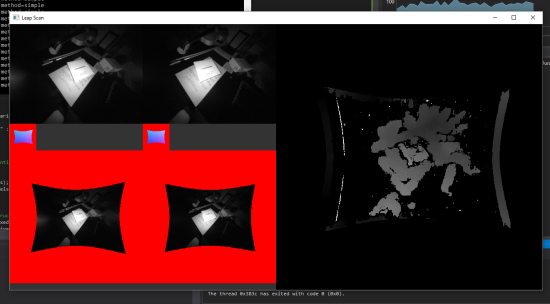

I used SFML, my go-to library for most things graphical in C++, to set up a debug GUI showing the images from the Leap at various stages of processing, with the output of the OpenCV 3D scene reconstruction on the right, as seen in the screenshot above.

Unfortunately I couldn’t seem to find any combination of parameters to the OpenCV algorithms that would result in a good output. Sometimes the output was “okay” but nowhere near what I was hoping for.

There are a few enhancements I could make, such as pushing more of the processing to the GPU and trying some other reconstruction methods, but I think I’ll probably just park the project and hope that one day Leap Motion expose point cloud data from their API or share some of their secrets on how exactly they get such accurate tracking.

If you own a Leap Motion and want to give my code a go, it’s hosted on GitHub: https://github.com/Palmr/LeapScan